Boosting Success: Strategies for Personal Growth and Achievement

Boosting Your Performance: Strategies for Success

Whether you’re striving for personal growth, professional success, or academic excellence, finding ways to boost your performance is key to achieving your goals. By implementing effective strategies and making positive changes in your routine, you can enhance your productivity and reach new heights of success.

Set Clear Goals

Start by setting clear and achievable goals that align with your aspirations. Define specific objectives that are measurable and time-bound to give yourself a clear direction and purpose.

Develop a Routine

Create a daily routine that prioritises tasks based on their importance and deadlines. Establishing a structured schedule can help you stay organised, focused, and efficient in managing your time.

Stay Motivated

Find ways to stay motivated and inspired throughout your journey towards success. Whether it’s through positive affirmations, visualising your goals, or seeking support from mentors, maintaining high levels of motivation is essential for sustained performance improvement.

Continuous Learning

Commit to lifelong learning and self-improvement by seeking opportunities to expand your knowledge and skills. Stay updated with industry trends, attend workshops and seminars, or pursue further education to enhance your expertise and stay ahead of the curve.

Embrace Challenges

View challenges as opportunities for growth rather than obstacles to overcome. Embracing challenges with a positive mindset can help you develop resilience, problem-solving skills, and the confidence to tackle any hurdles that come your way.

Seek Feedback

Solicit feedback from peers, mentors, or supervisors to gain valuable insights into areas where you can improve. Constructive feedback allows you to identify strengths and weaknesses in your performance and make necessary adjustments for continuous growth.

In conclusion, boosting your performance requires a combination of strategic planning, discipline, motivation, learning agility, resilience, and feedback integration. By incorporating these strategies into your daily routine and mindset, you can elevate your performance levels and achieve greater success in all aspects of life.

Understanding Boosting in Machine Learning: Key Concepts, Applications, and Techniques Explained

- What is bagging vs boosting?

- What is an example of boosting?

- Why is boosting good?

- What are bagging and boosting?

- What is the difference between bagging and boosting?

- Why is boosting called boosting?

- Why do we use boosting?

- How do you use boosting?

- When should you use boosting?

What is bagging vs boosting?

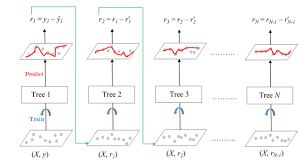

In machine learning, bagging and boosting are two popular ensemble learning techniques that improve predictive performance by combining multiple base learners. Bagging, short for Bootstrap Aggregating, trains multiple models independently on bootstrap samples of the training data (random samples drawn with replacement) and then aggregates their predictions by averaging or voting. Boosting, by contrast, trains a sequence of weak learners iteratively, with each new model learning from the mistakes of its predecessors to improve overall accuracy. Both are ensemble methods intended to improve generalisation, but they differ in how they get there: bagging mainly reduces variance by averaging independently trained models, whereas boosting mainly reduces bias by building a strong model out of weak learners one step at a time.

What is an example of boosting?

Boosting is a machine learning technique that combines multiple weak learners to create a strong predictive model. One common example of boosting is the AdaBoost algorithm, where each weak learner focuses on the instances that were misclassified by the previous learners. By iteratively adjusting the weights of misclassified instances, AdaBoost creates a robust ensemble model that improves its performance with each iteration. This iterative process of combining weak learners to form a powerful predictive model is a fundamental concept in boosting algorithms, demonstrating how multiple simple models can work together to enhance overall accuracy and predictive capability.
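To make the reweighting idea concrete, here is a minimal sketch of AdaBoost in plain Python. It assumes labels coded as +1 and -1, one-dimensional inputs, and decision stumps (single-threshold rules) as the weak learners. The function names and the toy data are illustrative choices for this sketch, not part of any library.

```python
import math

def stump_predict(x, threshold, polarity):
    """Weak learner: predict `polarity` above the threshold, the opposite below it."""
    return polarity if x > threshold else -polarity

def train_stump(xs, ys, weights):
    """Return the (threshold, polarity, weighted_error) with the lowest weighted error."""
    values = sorted(set(xs))
    # Candidates: one threshold below every point, plus midpoints between neighbours.
    thresholds = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]
    best = None
    for threshold in thresholds:
        for polarity in (1, -1):
            error = sum(w for x, y, w in zip(xs, ys, weights)
                        if stump_predict(x, threshold, polarity) != y)
            if best is None or error < best[2]:
                best = (threshold, polarity, error)
    return best

def adaboost(xs, ys, rounds):
    """Train `rounds` stumps, reweighting the data after each one."""
    n = len(xs)
    weights = [1.0 / n] * n          # start with uniform instance weights
    ensemble = []
    for _ in range(rounds):
        threshold, polarity, error = train_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)   # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this learner's vote strength
        ensemble.append((alpha, threshold, polarity))
        # Misclassified points (y * h(x) == -1) gain weight, correct ones lose weight.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def predict(ensemble, x):
    """Weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, p) for alpha, t, p in ensemble)
    return 1 if score >= 0 else -1

# Toy data (made up for illustration): no single threshold separates the classes.
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1]

one_round = adaboost(xs, ys, rounds=1)
three_rounds = adaboost(xs, ys, rounds=3)
print([predict(one_round, x) for x in xs])
print([predict(three_rounds, x) for x in xs])
```

On this toy data a single stump can get at most six of the nine points right, while three boosting rounds classify all nine correctly. This shows, on a very small scale, how several weak learners can combine into a stronger model.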

Why is boosting good?

Boosting is beneficial for several reasons. By combining many weak learners, each only slightly better than random guessing, it can produce a strong model that is far more accurate than any of its individual components. Because each new learner concentrates on the instances its predecessors got wrong, boosting mainly reduces bias and steadily improves performance on difficult-to-predict cases. It also works with very simple base learners, such as shallow decision trees, and applies to both classification and regression tasks. Overall, boosting often yields higher predictive accuracy than a single model trained on the same data.

What are bagging and boosting?

Bagging and boosting are two popular ensemble learning techniques used in machine learning to improve the performance of predictive models. Bagging, short for Bootstrap Aggregating, involves training multiple individual models on different bootstrap samples of the training data and then combining their predictions through voting or averaging to make the final prediction. Boosting, on the other hand, trains multiple weak learners sequentially so that each corrects the errors made by previous models, with each subsequent model giving more weight to the misclassified instances. Both aim to increase accuracy and improve generalisation by combining multiple models into more robust predictions, although it is bagging that chiefly reduces overfitting; boosting chiefly reduces bias and can itself overfit if run for too many rounds on noisy data.

What is the difference between bagging and boosting?

When discussing machine learning algorithms, the difference between bagging and boosting lies in their approach to building multiple models. Bagging, short for bootstrap aggregating, involves training each model independently on different subsets of the training data and then combining their predictions through averaging or voting. On the other hand, boosting focuses on sequentially training a series of models, where each subsequent model corrects the errors made by its predecessor, leading to a stronger overall predictive performance. While bagging aims to reduce variance by averaging diverse models’ predictions, boosting aims to reduce bias by iteratively improving the model’s accuracy on difficult-to-predict instances.
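For contrast with boosting's sequential training, the following is a rough sketch of bagging in plain Python. It assumes labels coded as +1 and -1, one-dimensional inputs, and decision stumps as the base models. The function names, the seed, and the toy data are hypothetical choices made for illustration. Note that each model is trained on its own bootstrap sample without looking at any other model, and the final prediction is a simple majority vote.

```python
import random

def stump_predict(x, threshold, polarity):
    """Predict +1 or -1 from a single threshold on a 1-D input."""
    return polarity if x > threshold else -polarity

def train_stump(xs, ys):
    """Pick the threshold and polarity with the fewest errors on (xs, ys)."""
    values = sorted(set(xs))
    thresholds = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]
    best = None
    for threshold in thresholds:
        for polarity in (1, -1):
            errors = sum(stump_predict(x, threshold, polarity) != y
                         for x, y in zip(xs, ys))
            if best is None or errors < best[2]:
                best = (threshold, polarity, errors)
    return best[0], best[1]

def bagging(xs, ys, n_models, seed=0):
    """Train n_models stumps, each on an independent bootstrap sample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        # Bootstrap: draw n indices with replacement from the training set.
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(train_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def bagging_predict(models, x):
    """Unweighted majority vote over all models."""
    votes = sum(stump_predict(x, t, p) for t, p in models)
    return 1 if votes >= 0 else -1

# Toy data (made up for illustration): two well-separated groups.
xs = [1, 2, 3, 4, 11, 12, 13, 14]
ys = [-1, -1, -1, -1, 1, 1, 1, 1]

models = bagging(xs, ys, n_models=25)   # odd count avoids tied votes
print([bagging_predict(models, x) for x in xs])
```

Because the models never depend on one another, the loop in `bagging` could be run in parallel. A boosting loop cannot be, since each round needs the errors of the rounds before it.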

Why is boosting called boosting?

The term “boosting” in machine learning comes from the idea of boosting a weak learner, one that performs only slightly better than random guessing, into a strong one. Rather than improving a single model, boosting aggregates many weak models so that the ensemble performs better than any of them alone. In algorithms such as AdaBoost, each iteration gives more weight to the instances misclassified so far, so the next learner focuses on the areas where the ensemble performs poorly and predictive capability improves gradually. The name captures this iterative process of lifting overall performance beyond what individual weak learners can achieve independently.

Why do we use boosting?

Boosting is a popular technique in machine learning used to improve the performance of predictive models. It works by combining multiple weak learners to create a strong learner that can make more accurate predictions. The primary goal of boosting is to reduce bias, and in practice it often reduces variance as well, which improves the model’s predictive power and generalisation. By iteratively adjusting the weights of misclassified data points and focusing on areas where the model performs poorly, boosting helps to create a robust, high-performing predictive model that can handle complex datasets.
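The weight adjustment can be written down explicitly for AdaBoost, one common boosting algorithm. The notation below is introduced here for illustration and assumes binary labels $y_i \in \{-1, +1\}$, weak learners $h_t$, and instance weights $w_i^{(t)}$ that sum to one:

$$
\begin{aligned}
\epsilon_t &= \sum_{i} w_i^{(t)} \, \mathbf{1}\!\left[h_t(x_i) \neq y_i\right] \\
\alpha_t &= \tfrac{1}{2} \ln\!\frac{1 - \epsilon_t}{\epsilon_t} \\
w_i^{(t+1)} &= \frac{w_i^{(t)} \exp\!\left(-\alpha_t \, y_i \, h_t(x_i)\right)}{Z_t} \\
H(x) &= \operatorname{sign}\!\left(\sum_{t} \alpha_t \, h_t(x)\right)
\end{aligned}
$$

Here $Z_t$ is the normalising constant that makes the new weights sum to one. As a worked example, a weak learner with weighted error $\epsilon_t = 0.25$ receives $\alpha_t = \tfrac{1}{2}\ln 3 \approx 0.549$. Before normalisation, each misclassified instance has its weight multiplied by $e^{0.549} \approx 1.73$ and each correctly classified instance by $e^{-0.549} \approx 0.58$.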

How do you use boosting?

Boosting is a powerful machine learning technique that combines multiple weak learners to create a strong predictive model. In practice, boosting trains a series of models sequentially, with each new model focusing on correcting the errors made by its predecessors. Some algorithms, such as AdaBoost, do this by assigning higher weights to misclassified instances; others, such as gradient boosting, fit each new model to the remaining errors of the current ensemble. The process continues until a predefined number of models has been created or a target level of accuracy has been reached. Using boosting effectively involves understanding these principles, tuning hyperparameters such as the number of rounds and the learning rate, and checking performance on held-out data, whether the task is classification or regression.
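The two stopping rules mentioned above (a fixed number of models, or a target accuracy) can be sketched in a small regression example. Note that this sketch uses gradient-boosting-style residual fitting with squared error rather than instance reweighting. The function names, the parameters `max_rounds`, `learning_rate` and `target_mse`, and the toy data are all assumptions made for illustration.

```python
def fit_regression_stump(xs, residuals):
    """Find the split minimising squared error; return (threshold, left_mean, right_mean)."""
    values = sorted(set(xs))  # needs at least two distinct x values
    best = None
    for a, b in zip(values, values[1:]):
        threshold = (a + b) / 2
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]

def stump_value(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean

def predict(model, x):
    """Base prediction plus the shrunken sum of all stump corrections."""
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_value(s, x) for s in stumps)

def mse(model, xs, ys):
    return sum((predict(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradient_boost(xs, ys, max_rounds=100, learning_rate=0.5, target_mse=1e-3):
    """Add stumps until max_rounds is reached or training MSE drops to target_mse."""
    base = sum(ys) / len(ys)          # start from the mean of the targets
    stumps = []
    model = (base, learning_rate, stumps)
    for _ in range(max_rounds):
        if mse(model, xs, ys) <= target_mse:
            break                      # accuracy-based stopping rule
        # Each new stump is fitted to what the current ensemble still gets wrong.
        residuals = [y - predict(model, x) for x, y in zip(xs, ys)]
        stumps.append(fit_regression_stump(xs, residuals))
    return model

# Toy data (made up for illustration): y = x squared.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [x * x for x in xs]

short_model = gradient_boost(xs, ys, max_rounds=5)
long_model = gradient_boost(xs, ys, max_rounds=20)
print(mse(short_model, xs, ys), mse(long_model, xs, ys))
```

The training error falls as rounds are added, which is why the number of rounds and the learning rate are usually tuned against held-out data rather than against training error alone.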

When should you use boosting?

Boosting is commonly used when the aim is to improve the performance of predictive models. It is particularly beneficial when simple individual models underfit, when the dataset is complex, or when you want higher accuracy on classification or regression tasks. Boosting works by combining multiple weak learners into a strong ensemble, iteratively shifting the focus towards the instances that earlier learners got wrong. By employing boosting, you can reduce bias and often achieve higher predictive performance in challenging scenarios where individual models struggle. Because it keeps concentrating on hard examples, however, boosting can overfit noisy data or mislabelled instances, so it is worth limiting the number of rounds and validating on held-out data.